Google Assistant vs Alexa Development

30 May 2018

I’ve been pretty much all-in on the Amazon Echo as my home voice system, and I still love having multiple devices around our home that we can call out commands to.

However, I’m always looking to expand Yeltzland onto new platforms, so I’ve ported the Alexa skill I wrote about a while back to the Actions on Google platform.

This is a summary of what I learnt doing that, and my view on the advantages and disadvantages of developing for each platform.

Actions on Google Advantages

Also available on phones

The main advantage of Google Assistant - one I hadn’t realised until I started this, even though it’s actually pretty obvious - is that it’s available on phones as well as the Google Home speaker.

On newer Android phones Google Assistant might be installed out of the box (or can be installed on recent versions), and there is also a nice equivalent iOS app.

I’ve just bought a Google Home Mini to try out, and it’s definitely comparable to the Echo Dot it sits next to, but I’ve found myself using Google Assistant a lot more on my iPhone than expected.

Visual responses are nicer

Because the Google Assistant apps are so useful, there is a lot more emphasis on returning visual responses to questions alongside the spoken responses.

Amazon does have the Echo Show and the Echo Spot that can show visual card information, but my uneducated guess is they make up a small percentage of the Echo usage.

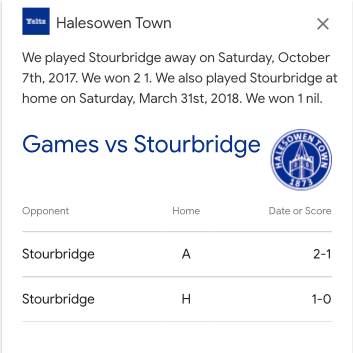

Google offers a much richer set of possible response types, which, not surprisingly, look a lot like search result answers.

In particular, the Table card - currently in developer preview - offers the chance to provide really rich responses, which suit the football results returned by my action very well.

Nice development environment

Both the Actions on Google console (used for configuring and testing your action) and the Dialogflow browser app (used for configuring your action intents) are really nice to use.

Amazon has much improved its developer tools recently, but it’s definitely a slight win for Google here for me. In particular, for simple actions/skills Dialogflow makes it easy to program responses without needing to write any code.

Using machine learning rather than fixed grammars to match questions to intents

Google states it’s using machine learning to build models that match questions to your stated intents, whereas Amazon expects you to be specific in stating the format of the expected phrasing.

Now from my limited testing - and since I’m basically implementing the same responses on both platforms - it’s hard to say how much better this approach is. However, assuming Google are doing a good job (and with their ML skills it’s fair to assume they are!), this is definitely a better approach.
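To make the difference concrete, here is a toy Python sketch contrasting the two styles of matching. It is only an illustration under loud assumptions: the intent names and sample phrases are hypothetical, the "fuzzy" matcher is a simple Jaccard word-overlap score rather than a trained model, and neither function is what Google or Amazon actually runs.

```python
import re

# Hypothetical intents and sample phrases, for illustration only.
SAMPLES = {
    "LatestResult": ["what was the latest score", "how did we get on"],
    "NextFixture": ["who do we play next", "when is the next game"],
}


def words_in(text):
    """Lower-case the text and return its words in order."""
    return re.findall(r"[a-z']+", text.lower())


def match_exact(utterance):
    """Fixed-phrasing style: match only if the words equal a sample phrase."""
    cleaned = " ".join(words_in(utterance))
    for intent, phrases in SAMPLES.items():
        if cleaned in phrases:
            return intent
    return None


def match_fuzzy(utterance, threshold=0.5):
    """Looser style: pick the intent whose sample shares the most words."""
    spoken = set(words_in(utterance))
    best_intent, best_score = None, 0.0
    for intent, phrases in SAMPLES.items():
        for phrase in phrases:
            sample = set(words_in(phrase))
            union = spoken | sample
            # Jaccard similarity: shared words divided by all distinct words.
            score = len(spoken & sample) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None
```

With these samples, "Who do we play next week?" fails the exact matcher because of the extra word, while the overlap-based matcher still lands on the hypothetical `NextFixture` intent.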

Allowing prompts for missing slot values

Google has a really nice feature where you can specify a prompt for a required slot if it has matched an intent but not been able to parse the parameter value.

For example, one of my intents is a query like “How did we get on against Stourbridge?” where Stourbridge is an opposition team matched from a list of possible values.

Amazon won’t find an intent if it doesn’t make a full match, but on Google I can specify a prompt like “for what team?” if it makes a partial match but didn’t recognise the team name given, and then continue on with the intent fulfilment.
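The behaviour described above can be sketched in a few lines of Python. This is not Dialogflow's API (there the prompt is set up in the console rather than in code); it is a minimal stand-alone sketch of the idea, and the team list apart from Stourbridge, along with the function names, is made up for illustration.

```python
from typing import Optional

# Hypothetical list of opposition teams; only Stourbridge comes from the post.
KNOWN_TEAMS = ["Stourbridge", "Example Town", "Sample United"]


def extract_team(utterance: str) -> Optional[str]:
    """Return the first known team mentioned in the utterance, if any."""
    lowered = utterance.lower()
    for team in KNOWN_TEAMS:
        if team.lower() in lowered:
            return team
    return None


def handle_result_query(utterance: str) -> str:
    """Answer the query, or prompt when the required team slot is missing."""
    team = extract_team(utterance)
    if team is None:
        # The intent matched but the required parameter did not parse,
        # so ask for it instead of failing the whole request.
        return "For what team?"
    return f"Looking up the result against {team}."
```

A misheard team name such as "Stowbridge" would produce the "For what team?" prompt here, and the next reply can then be fed back through `extract_team` to complete the request.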

Actions on Google Disadvantages

Couldn’t parse “Yeltzland” action name

A very specific case, and not a common word for sure, but Google speech input just couldn’t parse the word “Yeltzland” correctly. This was very surprising, as I’ve usually found Google’s voice input to be very good but it kept parsing it as something like “IELTS LAND” 😞

You also have to get specific permission for a single word action name - not really sure why that is - so I’ve had to go with “Talk to Halesowen Town” rather than my preferred “Talk to Yeltzland” action invocation. It all works fine on Amazon.

SSML not as good

A couple of my intents return SSML rather than plain text, in an attempt to improve the phrasing of the responses (and add in some lame jokes!).

This definitely works a lot better on the Echo than on Google Assistant.
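For readers who haven't used SSML, here is a minimal Python sketch of the kind of response involved. It assumes only the standard `<speak>` and `<break>` elements; the helper name, the pause length, and the sample sentences are illustrative and not taken from the real skill.

```python
from xml.sax.saxutils import escape


def build_ssml(sentences, pause_ms=400):
    """Join sentences into one SSML document with a pause between each."""
    # Escape &, < and > so the spoken text cannot break the markup.
    parts = [escape(sentence) for sentence in sentences]
    pause = f'<break time="{pause_ms}ms"/>'
    return "<speak>" + pause.join(parts) + "</speak>"


if __name__ == "__main__":
    print(build_ssml(["Halesowen won 2-1.", "Not bad for a Tuesday night!"]))
```

Both platforms accept markup along these lines, but as noted above the same markup can sound quite different on each, so it is worth listening to the result on both devices.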

What about Siri?

All this emphasises how far behind the other voice assistants Siri is right now.

Siri is inconsistent on different devices, often has pretty awful results understanding queries, and is only extensible in a few limited domains.

I really hope they offer some big changes in next week’s 2018 WWDC - maybe some integration with Workflow as I hoped for last year - but I really don’t hold out much hope any more that they can make significant improvements at any sort of speed. Let’s hope I’m wrong.

Conclusion

As you can tell, I’m really impressed with Google’s offering here, and it definitely seems slightly ahead of Amazon in offering a good environment for developing voice assistant apps.

In particular, having good mobile apps offering the chance to return rich visual information alongside the voice response is really powerful.

My “Halesowen Town” action is currently in review with Google (as of May 30th, 2018), so all being well should be available for everyone shortly - look out for the announcement on Twitter!

P.S. If you are looking for advice or help in building out your own voice assistant actions/skills, don’t hesitate to get in touch at johnp@bravelocation.com